One of my professional and personal goals for 2023/24 is to submit a data visualization to the Tableau Iron Viz competition, which happens every year at the annual Tableau Conference. This is something I’ve wanted to enter for quite a few years, but I always seemed to miss the deadline. This year, I’m preparing and keeping myself accountable by writing these blog posts and documenting what I’ve learned along the way.

I’m not sure how often I’ll be updating this blog, especially going into summer 2023, but with October just four months away (submissions for Tableau Iron Viz 2023 were in October), now seems like as good a time as any to make it happen!

What is the Tableau Iron Viz?

Tableau Iron Viz is a competition that invites Tableau users from around the world to create compelling and innovative visualizations around a given theme. For example, Tableau Iron Viz 2023’s theme was “Games” and here are all the entries. A committee of Tableau Community judges reviews the submissions and scores them on Design, Storytelling, and Analysis. From there, the top 15 entries and three (3) finalists will be selected to attend the Iron Viz Championship, where the finalists are tasked with creating captivating data visualizations within a limited time frame in front of a live audience.

Why am I entering?

The Iron Viz slogan, “Win or Learn, You Can’t Lose,” is apt. In this case, it’s as simple as that. The goal here is for me to learn as much as I can and become comfortable with a 30-day submission deadline. As mentioned in the intro, the intention is to prepare and document everything I can as I build out an example of my own choosing. At the time of writing, I’ve decided to tackle a dataset local to Winnipeg, using the City of Winnipeg Open Data Portal.

The dataset: Winnipeg City Council Member Expenses

I decided to go with the City of Winnipeg’s Council Member Expenses dataset, which contains over 32,000 rows of data dating back to 2014. This felt like a fair amount of data to play with: it has a fun measure, Amount Spent, that can be grouped by many different dimensions, including Council Member, City Ward, Department, and the Vendor the expense was paid to.

The dashboard I’m envisioning at this time is something interactive that allows users to segment by their own Ward and/or Council Member, as well as view an aggregate of all the Council Members, Wards, and Vendors over time. I don’t believe something of this caliber exists for Winnipeggers today (although maybe CBC Manitoba has done it in the past). Regardless, my goal is to have an ongoing, scheduled extract of data that is accessible to the public in a visualized manner, while learning in the process.

Note: This dataset isn’t set in stone. I may also look at exporting all the City of Winnipeg’s 311 Calls as that, too, is a fun dataset to look at.

Server set-up and scheduled extracts

Despite this being just “Part 1” of this journey, I have to say it’s been the most time-consuming. Isn’t 80% of all data analysis just finding and cleaning the data anyway? Again, the goal here was to create a scheduled extract on an ongoing basis. I don’t want a one-off download of a CSV file, as it would only provide a snapshot up to that exact moment in time.

To get around this, I decided to use n8n.io, a tool that I’ve used in the past (and absolutely love). While they have a decent monthly cloud-based subscription, I wanted to go the cheaper route by installing the free community version on my own server.

Here are the steps I took to set this up:

- I purchased a new domain for CA$5.00 / year from Hover.com

- I purchased a basic Toronto-based droplet server from DigitalOcean for CA$7.00 / month and installed a copy of Docker Compose

- I installed the free community version of the n8n.io workflow automation tool

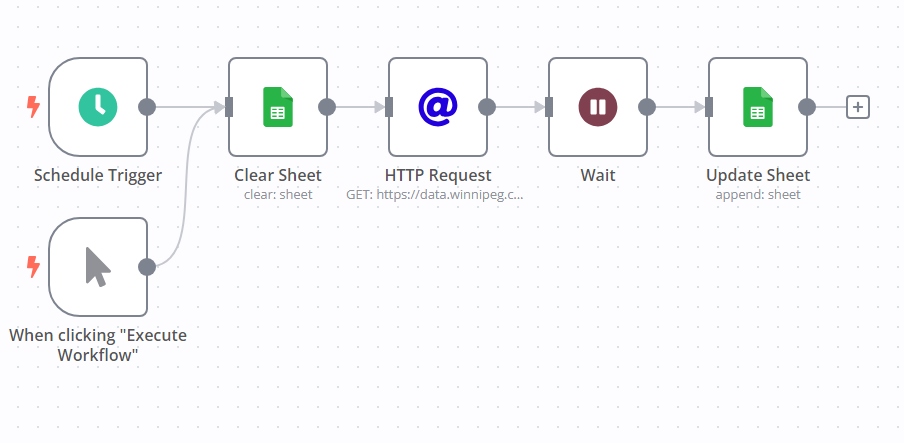

- I built a simple weekly scheduled automation to extract the data from the City of Winnipeg Open Data Portal into a Google Sheet
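For anyone who would rather script the extract step than build it in n8n, here is a rough Python sketch of the same idea. To be clear, this is not my n8n workflow. It assumes the portal exposes a Socrata-style JSON endpoint that pages with `$limit` and `$offset` query parameters, and `BASE_URL`, `PAGE_SIZE`, `page_url`, `fetch_json`, and `fetch_all_rows` are all hypothetical names made up for illustration. The dataset ID in the URL is a placeholder, and writing the rows into a Google Sheet is left out entirely.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable

# Hypothetical placeholder: swap in the real portal host and dataset ID.
BASE_URL = "https://data.example.org/resource/xxxx-xxxx.json"
PAGE_SIZE = 1000  # assumed page size, not a documented portal limit


def page_url(base_url: str, offset: int, limit: int = PAGE_SIZE) -> str:
    """Build the URL for one page, assuming $limit/$offset style paging."""
    query = urllib.parse.urlencode(
        {"$limit": limit, "$offset": offset},
        safe="$",  # keep the "$" readable instead of encoding it as %24
    )
    return f"{base_url}?{query}"


def fetch_json(url: str) -> list[dict]:
    """Download one page and parse it as a JSON list of row objects."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return json.load(response)


def fetch_all_rows(
    base_url: str,
    fetch: Callable[[str], list[dict]] = fetch_json,
    limit: int = PAGE_SIZE,
) -> list[dict]:
    """Request pages until a short (or empty) page signals the end."""
    rows: list[dict] = []
    offset = 0
    while True:
        page = fetch(page_url(base_url, offset, limit))
        rows.extend(page)
        if len(page) < limit:
            break
        offset += limit
    return rows
```

Calling `fetch_all_rows(BASE_URL)` on a weekly schedule (cron, for example) would pull the full dataset each time rather than a one-off snapshot. The `fetch` argument is there so the paging logic can be tried out with a fake function before pointing it at a real endpoint.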

Now that I have my Google Sheet being updated on a weekly basis, I can move on to Part 2 of this journey: playing around with the data.